Introduction

I was a part of a ten-week, interdisciplinary research program at the Qualcomm Institute (formerly known as Calit2), a non-academic university research unit at the University of California, San Diego.

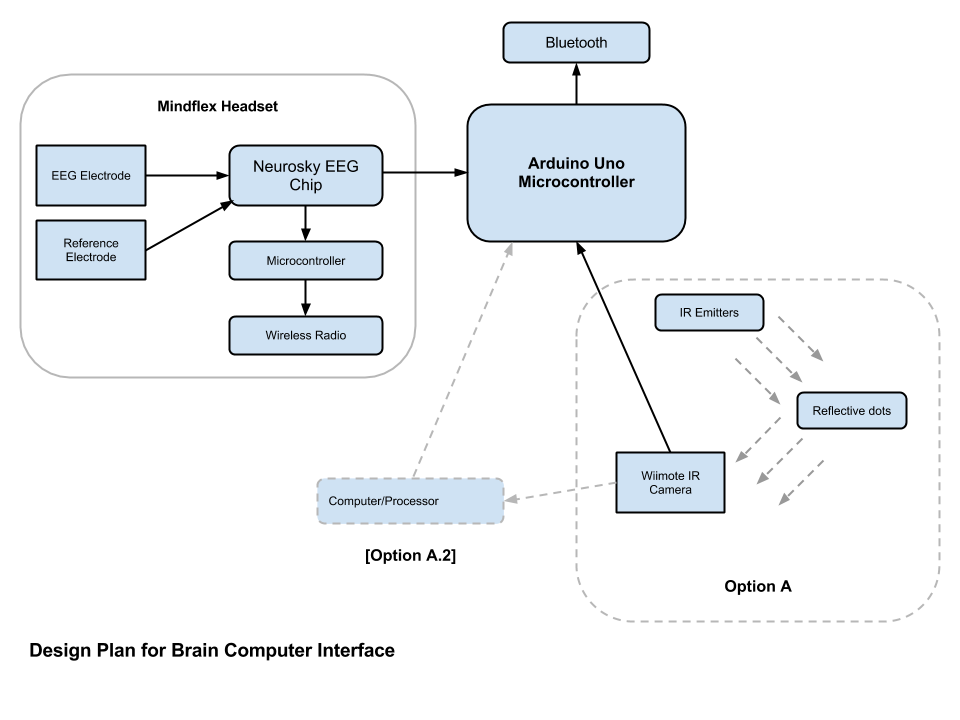

For my project, I explored how open-source technologies can be leveraged for neurofeedback applications. Specifically, I made (and am continuing to make) a Brain-Computer Interface (BCI) game for children with Autism Spectrum Disorders (ASD). My project was inspired by research conducted by the Department of Psychology at the University of Victoria, British Columbia, and the Institute for Neural Computation at UCSD. The research is now known as Let's Face It!, a facial-recognition-based video game whose purpose is to teach children with ASD how to recognize and mimic facial expressions. Players have to match the facial expressions of the icons on the screen in order to get past obstacles in a maze. This video provides a good summary of their research.

The Interface

How To Make Your Own!

Parts

Putting it Together

Next Steps

Research

Acknowledgements

- Virginia de Sa and the de Sa Lab

- Saura Naderi, Cameron Dechant, and Maurice Harris of MyLab

- San Diego Electronic Supply

- Eric Mika of Frontier Nerds

- "Kako" or the ghost everyone keeps citing as the original Wii Remote hacker